As technology progresses, it becomes both more complex and more interconnected. Cars, airplanes, medical devices, financial transactions and electricity systems now rely on computer software to function properly. As this dependence on software grows, artificial intelligence has become an increasingly important part of people’s lives.

AI has come a long way since it was merely a concept in science fiction books and movies. Researchers at the University of Oxford who surveyed AI experts found that the experts expect artificial intelligence to do many things better than humans over the coming decades.

For example, the experts predicted that AI would be better than the average human at translating languages by 2024 and able to perform surgery by 2053. In other words, AI is expected to surpass human abilities in more and more areas over time. Along the way, these technologies can improve business processes and increase efficiency.

What is Artificial Intelligence?

Artificial intelligence, or AI, is the process of making a computer system “smart” by programming it to think like humans and mimic their actions. The term can also describe any machine that exhibits traits associated with the human mind, such as learning and problem-solving.

Artificial intelligence has applications in many industries, such as finance, manufacturing and logistics. It also powers innovative technologies in the medical sector.

This technology can help people make better decisions by analyzing data and providing insights that would otherwise be difficult to obtain. It can also help automate tasks that are time-consuming or tedious.

A key strength of artificial intelligence is its potential to act rationally and take the actions most likely to achieve a specific goal. Machine learning (ML) is a subset of AI in which computer programs learn automatically from new data instead of relying on humans to intervene. Deep learning methods, in turn, allow modern computers to process large amounts of unstructured information, such as text files, pictures and videos.

Machine Learning

Machine learning is a branch of artificial intelligence in which computers are designed to imitate human learning. Artificial intelligence systems carry out complex tasks by simulating how humans solve problems.

According to Boris Katz, a principal research scientist and head of the InfoLab Group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), AI strives to create computer models that show “intelligent behaviors” similar to those of humans. These behaviors include recognizing a visual scene, understanding natural language and carrying out a physical action.

Machine learning is responsible for predictive text, chatbots and language translation apps. It also determines how social media platforms present feeds to you. If machines can do all of this simply by learning from data, there’s no telling what else autonomous machines will be able to do in the future.
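
Predictive text is a handy illustration of learning from data rather than from hand-written rules. The sketch below is a deliberately simplified bigram model, not how any production keyboard works: it counts which word follows which in a tiny, made-up training corpus and suggests the most frequent followers. The function names `train_bigrams` and `suggest` and the sample sentences are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word in the training sentences."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def suggest(counts: dict[str, Counter], word: str, k: int = 3) -> list[str]:
    """Return up to k of the most frequent next words seen after `word`."""
    return [w for w, _ in counts.get(word.lower(), Counter()).most_common(k)]


# Hypothetical training data: the "experience" the model learns from.
corpus = [
    "see you later",
    "see you soon",
    "see you later today",
    "talk to you later",
]
model = train_bigrams(corpus)
print(suggest(model, "you"))  # ['later', 'soon']
```

Feeding the model more sentences changes its suggestions without anyone editing the code, which is the core idea behind machine learning. Real systems use far larger models and corpora.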

Deep Learning

Deep learning is a form of artificial intelligence loosely modeled on the human brain. It is a subset of machine learning that uses more complex algorithms. Because deep learning uses neural networks and large amounts of data to find patterns and produce answers, it needs little human intervention. Neural networks are layers of interconnected nodes that loosely simulate neurons in the human brain, allowing computers to learn and draw conclusions from the information they receive.
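
To show what “layers of interconnected nodes” means in practice, here is a minimal sketch of a two-layer network in plain Python that computes XOR, a function a single neuron of this kind cannot represent. The weights are hand-picked assumptions for illustration only; a real deep learning system has many more layers and nodes and learns its weights from data (typically with backpropagation) instead of having them set by hand.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: each node takes a weighted sum of all inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hand-picked, hypothetical weights. A trained network would learn these from data.
HIDDEN_WEIGHTS = [[20.0, 20.0], [20.0, 20.0]]
HIDDEN_BIASES = [-10.0, -30.0]  # first hidden node behaves like OR, second like AND
OUTPUT_WEIGHTS = [[20.0, -20.0]]
OUTPUT_BIASES = [-10.0]  # output fires for "OR but not AND", which is XOR


def predict(x1: float, x2: float) -> float:
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, round(predict(a, b)))
```

The hidden layer turns the raw inputs into intermediate features (OR and AND), and the output layer combines those features; stacking many such layers is what makes a network “deep.”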

Deep learning is at work in autonomous vehicles, where it allows them to detect obstacles and other objects on the road. Its algorithms also power facial recognition technology, which is used for purposes ranging from tagging friends on social media to security.

Deep learning algorithms can still function well despite cosmetic changes, such as a different hairstyle, or poor lighting conditions.

History of Artificial Intelligence


Artificial intelligence (AI) is a rapidly developing field that began approximately 60 years ago. It comprises various sciences, theories and techniques that rely on mathematical logic, statistics, computational neurobiology, probabilities and computer science to mimic human reasoning and cognitive abilities.

AI’s origins date back to World War II, and its subsequent development has relied heavily on advances in computing. These advances produced computers that can perform tasks previously possible only for human beings.

Dawn of Artificial Intelligence Program

A surge in technological advancements took place from 1940 to 1960. The period also saw a desire to understand how to integrate machines and organic beings. Norbert Wiener, a pioneer of cybernetics, wanted to combine mathematical theory, electronics and automation into a single theory of control and communication in both animals and machines.

The concept built on a 1943 paper in which Warren McCulloch and Walter Pitts described networks of artificial neurons, inspired by how the brain can perform simple logical functions. The paper went on to influence computer-based neural networks, which are designed to mimic the way the brain works.
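
The artificial neuron McCulloch and Pitts described is simple enough to sketch in a few lines. The version below follows the usual textbook presentation of their model (binary inputs, a firing threshold, and inhibitory inputs that veto firing) and shows how single units can compute AND, OR and NOT. The function names are illustrative and are not taken from the original paper.

```python
def mcculloch_pitts(excitatory: list[int], inhibitory: list[int], threshold: int) -> int:
    """Binary threshold unit in the style of McCulloch and Pitts.

    Any active inhibitory input vetoes firing; otherwise the unit fires (returns 1)
    when the number of active excitatory inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def and_gate(a: int, b: int) -> int:
    # Both inputs must be active to reach a threshold of 2.
    return mcculloch_pitts([a, b], [], threshold=2)


def or_gate(a: int, b: int) -> int:
    # A single active input is enough to reach a threshold of 1.
    return mcculloch_pitts([a, b], [], threshold=1)


def not_gate(a: int) -> int:
    # No excitatory inputs and a threshold of 0: fires unless `a` inhibits it.
    return mcculloch_pitts([], [a], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(f"{a} {b} | AND={and_gate(a, b)} OR={or_gate(a, b)}")
print(f"NOT 0 = {not_gate(0)}, NOT 1 = {not_gate(1)}")
```

Because these units compute logical functions, networks of them can in principle compute more complex logical expressions, which is the link between this early work and later neural networks.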

Even though Alan Turing and John von Neumann did not coin the term artificial intelligence, they became founding fathers of the technology. They helped move computers from the decimal logic of 19th-century machines to binary logic.

Turing Test

The two researchers laid the foundations of modern computer architecture and showed that a universal machine could, in principle, execute any program. In 1950, Turing wrote an article called “Computing Machinery and Intelligence,” in which he asked whether machines could be intelligent.

To test this, he proposed an imitation game in which a human judge communicates with another human and a machine through a teletype dialogue. If the judge cannot tell which one is the machine, the machine should be considered intelligent. This method of inquiry later became known as the Turing Test.

Although the article has been widely criticized, it remains a landmark in discussions about the line between humans and machines. Some people question whether the Turing Test measures intelligence at all, but developing artificial intelligence that can pass it is still challenging.

Logic Theorist

John McCarthy, then at Dartmouth College, coined the term artificial intelligence. Marvin Minsky of MIT defined artificial intelligence as building computer programs to do things that people currently do better because those tasks require high-level cognitive processes such as perceptual learning, memory organization and critical reasoning.

The 1956 Dartmouth Summer Research Project on Artificial Intelligence saw the introduction of the Logic Theorist, often considered the first artificial intelligence program. Funded by the Research and Development (RAND) Corporation, it was designed by Allen Newell, Cliff Shaw and Herbert Simon to mimic human problem-solving skills.

Increasing Popularity

AI made significant advancements from 1957 to 1974. Computers became more efficient, and data storage increased. Additionally, machine learning algorithms progressed, and people could better discern which algorithm best fit their needs. The successes convinced government agencies like the Defense Advanced Research Projects Agency (DARPA) to invest in AI research at various institutions across America.

Dendral, begun in 1965, is regarded as the first AI expert system because it mimicked organic chemists’ decision-making processes and problem-solving behavior. Developed by Edward Feigenbaum and his colleagues at Stanford University, it aimed to study hypothesis formation and to build models of empirical induction.

Natural Language Processing

The government focused on creating artificial intelligence with speech recognition capabilities. It also wanted a computer program that could process data quickly. Optimism was high, and expectations were even higher. In a 1970 interview with Life magazine, Marvin Minsky said that a machine with the average intelligence of a human being could arrive within three to eight years. But even with evidence that the concept could work, much more work was needed to achieve natural language processing, self-awareness and abstract thinking.

In 1970, Waseda University in Japan created the first anthropomorphic robot, WABOT-1. The machine had a limb-control system, a vision system and a conversation system.

However, in 1973, James Lighthill reported on the state of artificial intelligence research to the British Science Research Council. He concluded that AI programs had not had the impact the authorities expected of them. As a result, funding for AI research decreased significantly.

But in 1976, Raj Reddy, a computer scientist, published an article summarizing work on Natural Language Processing (NLP). By 1978, John P. McDermott of Carnegie Mellon University had developed the XCON (eXpert CONfigurer) program. This rule-based expert system helped customers order VAX computers from Digital Equipment Corporation by automatically selecting components based on their requirements.

Increasing Interest in AI

AI underwent a resurgence in the 1980s, driven by an expanded set of algorithms and more funding. Deep learning techniques became popular because they enabled machines to learn from experience. Similarly, expert systems allowed computer programs to mimic the decision-making of human experts.

To build these expert systems, developers interviewed real-life experts about how they would respond to given situations. The systems then used this knowledge to provide recommendations to non-experts.
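
Expert systems of this kind typically encoded the experts’ knowledge as if-then rules and used an inference engine to apply those rules to the facts of a case. The sketch below is a minimal forward-chaining engine in Python. The rules and fact names are hypothetical, only loosely inspired by configuration tasks like the one XCON handled, and are not taken from any real system.

```python
# Each rule: (set of facts that must all be known, fact to add when they are).
# These rules are invented for illustration; real systems such as XCON had thousands.
RULES: list[tuple[set[str], str]] = [
    ({"needs_database"}, "needs_large_disk"),
    ({"needs_large_disk"}, "needs_disk_controller"),
    ({"many_users"}, "needs_extra_memory"),
    ({"needs_extra_memory", "needs_disk_controller"}, "needs_larger_cabinet"),
]


def forward_chain(facts: set[str], rules: list[tuple[set[str], str]]) -> set[str]:
    """Repeatedly fire any rule whose conditions hold until no new facts are added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all its conditions are already known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


requirements = {"needs_database", "many_users"}
recommendations = forward_chain(requirements, RULES) - requirements
print(sorted(recommendations))
# ['needs_disk_controller', 'needs_extra_memory', 'needs_large_disk', 'needs_larger_cabinet']
```

The knowledge lives in the `RULES` table rather than in the engine, so adding expertise means adding rules, which is why building these systems centered on interviewing experts.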

From 1982 to 1990, the Japanese government provided funding for AI-related programs. The Fifth Generation Computer Project (FGCP) aimed to innovate computer processing, improve artificial intelligence, and implement logic programming. Despite the $400 million investment, the program fell short of its goals.

But the end of this government funding did not stop the development of modern AI. Many landmark AI objectives were achieved in the 1990s and 2000s.

Advances in AI Technology

IBM’s chess-playing computer Deep Blue defeated grandmaster and reigning world chess champion Garry Kasparov in 1997. The victory showed that AI decision-making programs had made giant leaps.

In the same year, Dragon Systems developed speech recognition software for Windows. The program showed the advances made in spoken language interpretation and suggested that machines could take on an ever-widening range of problems. Cynthia Breazeal also developed Kismet, a robot that could recognize and display emotions.

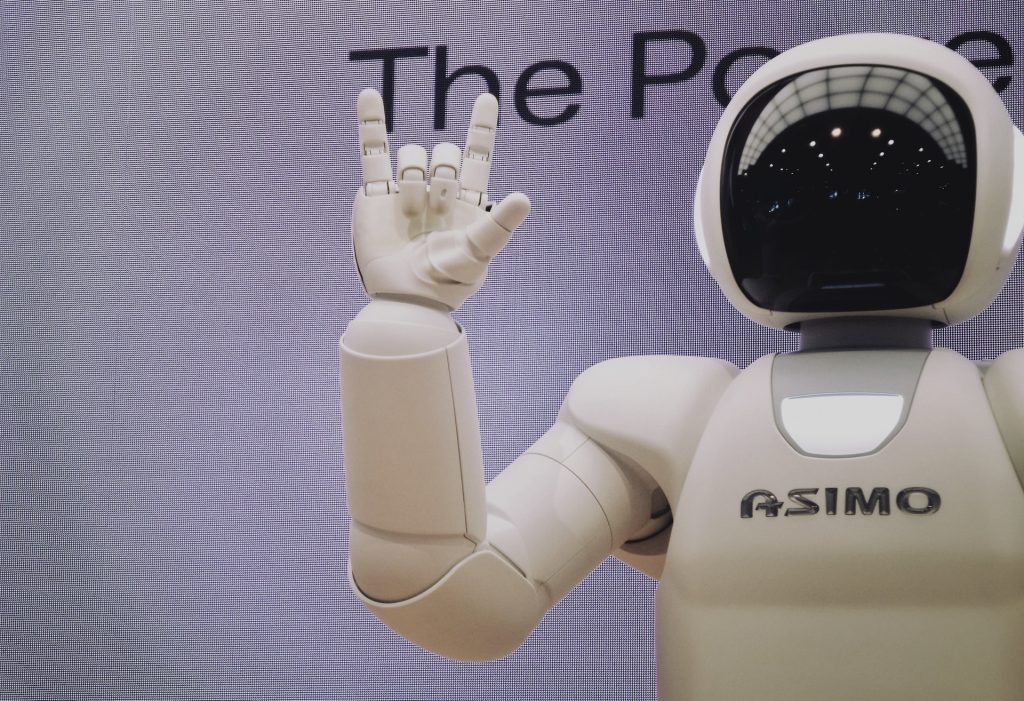

In 2000, Honda introduced ASIMO, a humanoid robot with artificial intelligence that can walk as quickly as a human. The robot can also serve customers in restaurants by delivering trays of food.

AI Technology Today

Although AI is not a recent development, businesses are starting to recognize its potential. Entrepreneurs, executives, and employees in different industries will see more opportunities as AI revolutionizes the business world like never before.

Here are some trends to look out for in the AI technology space:

Generative Artificial Intelligence

Generative AI focuses on creating content such as text, images and music, including images generated from text descriptions. This AI branch has several uses, including creating educational materials and art.

Generative language models can produce natural-sounding, grammatically correct text tailored to a specific style or topic. These models can also solve problems and adapt to different situations.

Reinforcement Learning

In reinforcement learning, data scientists focus on decision-making and reward-based training. In this branch of machine learning, a computer studies its environment, takes actions and adjusts its behavior based on the rewards it receives. Much like a human, it learns through trial and error to achieve its objective.
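
To make the trial-and-error loop concrete, here is a minimal sketch of tabular Q-learning, one classic reinforcement learning algorithm among many. The environment (a five-cell corridor with a reward only at the far end) and the hyperparameters `ALPHA`, `GAMMA` and `EPSILON` are assumptions chosen for illustration.

```python
import random

N_STATES = 5  # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Hypothetical environment: reward 1 for reaching the goal, 0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def greedy(q: dict, state: int, rng: random.Random) -> int:
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train(episodes: int = 500, seed: int = 0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each episode
            # Trial and error: usually exploit what was learned, sometimes explore.
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Nudge the estimate toward the reward plus the discounted best future value.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + GAMMA * future - q[(state, action)])
            state = next_state
            if done:
                break
    return q


q_table = train()
policy = [greedy(q_table, s, random.Random(0)) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: the agent has learned to always move right
```

Nobody tells the agent that “right” is correct; it discovers this because actions leading toward the reward end up with higher learned values.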

Reinforcement learning can help people make difficult decisions. It is used in a range of industries, including data science, financial trading and gaming.

Multimodal Learning

Multimodal learning uses input from multiple modes, such as text, images, video, sound and speech. For example, these systems can learn jointly from text and images to understand complex concepts more easily. By drawing on data from different sources, machines can produce more accurate results than they could with data from a single source.
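
One simple way to combine modalities is late fusion: separate models score each modality, and their outputs are merged into a single prediction. The sketch below averages the class probabilities of two hypothetical single-modality models; the labels, scores and the `late_fusion` helper are invented for illustration. Many multimodal systems instead learn a joint representation across modalities, which this sketch does not show.

```python
# Hypothetical class probabilities from two separate single-modality models
# looking at the same video clip. The numbers are invented for illustration.
LABELS = ["dog", "cat"]
image_probs = [0.55, 0.45]  # image model is unsure (for example, a blurry frame)
audio_probs = [0.90, 0.10]  # audio model clearly hears barking


def late_fusion(prob_lists: list[list[float]], weights: list[float]) -> list[float]:
    """Combine per-modality probabilities with a weighted average."""
    total = sum(weights)
    return [
        sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
        for i in range(len(prob_lists[0]))
    ]


fused = late_fusion([image_probs, audio_probs], weights=[1.0, 1.0])
print(LABELS[fused.index(max(fused))], [round(p, 3) for p in fused])  # dog [0.725, 0.275]
```

Here the confident audio model compensates for the uncertain image model, a small-scale version of the idea that combining sources can beat relying on any single one.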

Mimicking the way humans learn, multimodal learning is key to helping machines develop a richer understanding of the world. By taking input in multiple forms, AI models can more accurately identify patterns and relationships between objects and events, which should ultimately lead to better results for humans.

AI has come a long way since its inception. Despite early failures, the technology has continued to develop and prove its worth in various industries. Today, AI is being used more than ever and will only become more prevalent.